Code

library(tidyverse)

library(tidymodels)

library(gt)

set.seed(1234)

require(doParallel)

cores <- parallel::detectCores(logical = FALSE)Matt Waite

March 22, 2021

I do not know a lot about college basketball. I follow the travails of my employer and a little about the Big Ten Conference as a whole, but at best it’s surface knowledge. I kinda know who is good that we’re going to play and who isn’t. Beyond that, nada.

Which is bad when it comes to tournament time.

My typical pattern of filling out bracket is Have I Heard Of This Team, Do They Have a Legendary Coach or Do I Hate Them For Some Reason. Depending on the answers, I make my pick. It’s not rocket science, and it rarely works.

This season, along with my SPMC460 Advanced Sports Data Analysis class, I decided to try use machine learning to get me a better bracket. The class is about the use of machine learning in sports, and so we’re going to use classification algorithms to decide a simple W or L designation.

What follows is the logic and the code I used to fill out my bracket.

How did it go?

Not great. Could I have done better guessing? Doubtful.

What I’m using to make and feed the model is the Tidyverse and Tidymodels set of libraries, the gt library for presenting some tables later, and doParallel for parallel processing, because the xgboost model training takes a while.

The data I’m using is scraped from Sports Reference and it’s the box scores of every Division I college basketball game since the start of the 2014-2015 season. The data is a little funny in that each game is in there twice – I’m scraping school pages, so the Team is always that school, and the Opponent is someone else. So a game between two Division I schools will appear twice – one for each Team.

My logic in picking predictors was that how efficient teams are with the ball is important, so I estimated the number of possessions and then calculated offensive and defensive ratings (which is points per 100 possessions).

I then wanted some kind of a metric of how good of a win or how bad of a loss a particular game was. So I calculated the score margin and added it to the opponent’s simple rating from Sports Reference. So a team losing close to a good team isn’t a bad loss. A bad team beating a good team is a great win. And so on. So if you’re a team beating up on bad teams, you don’t get a lot of credit for that.

Then, I used the teams cumulative mean over the course of the season to estimate what they would have going into the game. Obviously, you can’t know how a team will play going into a game, but I figure that they’ll be somewhere around their cumulative mean, which should pick up if the team is playing better or worse over a few games.

Then, for tournament purposes, I cut that to the last 10 games of the season. You are who you are in your last 10 games before the end of the season.

At least, that was my thinking.

games <- read_csv("http://mattwaite.github.io/sportsdatafiles/cbblogs1521.csv") %>% mutate(

Possessions = .5*(TeamFGA - TeamOffRebounds + TeamTurnovers + (.475 * TeamFTA)) + .5*(OpponentFGA - OpponentOffRebounds + OpponentTurnovers + (.475 * OpponentFTA)),

OffensiveRating = (TeamScore/Possessions)*100,

DefensiveRating = (OpponentScore/Possessions)*100,

ScoreDifference = TeamScore - OpponentScore,

WinQuality = case_when(is.na(OpponentSRS) == TRUE ~ ScoreDifference, TRUE ~ ScoreDifference + OpponentSRS)

) %>%

group_by(Team, Season) %>%

mutate(

Cumulative_Mean_Offensive = cummean(OffensiveRating),

Cumulative_Mean_Defensive = cummean(DefensiveRating),

Cumulative_Mean_WinQuality = cummean(WinQuality)

) %>%

filter(between(Game, max(Game)-10, max(Game))) %>%

ungroup() %>%

mutate(

Outcome = case_when(

grepl("W", W_L) ~ "W",

grepl("L", W_L) ~ "L"

)

) %>%

mutate(Outcome = as.factor(Outcome)) Rows: 64866 Columns: 48

── Column specification ────────────────────────────────────────────────────────

Delimiter: ","

chr (8): Season, TeamFull, Opponent, HomeAway, W_L, URL, Conference, Team

dbl (39): Game, TeamScore, OpponentScore, TeamFG, TeamFGA, TeamFGPCT, Team3...

date (1): Date

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.Then, to get both sides of a match-up to be the correct stats, I used some joining to combine them into a single dataset with the cumulative stats for each side that will then use to train a model.

selectedgames <- games %>%

select(

Season, Team, Date, Opponent, Outcome, Cumulative_Mean_Offensive, Cumulative_Mean_Defensive, Cumulative_Mean_WinQuality, TeamSRS, TeamSOS)

opponentgames <- selectedgames %>%

select(-Opponent) %>%

rename(

Opponent = Team,

Opponent_Cumulative_Offensive = Cumulative_Mean_Offensive,

Opponent_Cumulative_Mean_Defensive = Cumulative_Mean_Defensive,

Opponent_Cumulative_Mean_WinQuality = Cumulative_Mean_WinQuality,

OpponentSRS = TeamSRS,

OpponentSOS = TeamSOS

)

bothsides <- selectedgames %>%

left_join(opponentgames, by=c("Opponent", "Date", "Season")) %>%

na.omit() %>%

select(-Outcome.y) %>%

rename(Outcome = Outcome.x)There’s a growing supply of tutorials on how to use tidymodels to do machine learning, and one of the authors of the library, Julia Silge, has a long list of posts that greatly helped me figure this all out.

To start the modeling processing, I’m going to split my data into training and testing sets.

I then created a simple recipe, which doesn’t do much except set aside some fields as ID fields instead of making them predictors.

# A tibble: 15 × 4

variable type role source

<chr> <chr> <chr> <chr>

1 Season nominal ID original

2 Team nominal ID original

3 Date date ID original

4 Opponent nominal ID original

5 Cumulative_Mean_Offensive numeric predictor original

6 Cumulative_Mean_Defensive numeric predictor original

7 Cumulative_Mean_WinQuality numeric predictor original

8 TeamSRS numeric predictor original

9 TeamSOS numeric predictor original

10 Opponent_Cumulative_Offensive numeric predictor original

11 Opponent_Cumulative_Mean_Defensive numeric predictor original

12 Opponent_Cumulative_Mean_WinQuality numeric predictor original

13 OpponentSRS numeric predictor original

14 OpponentSOS numeric predictor original

15 Outcome nominal outcome originalThen I define my model, which I am going to tune all of the hyperparameters in an xgboost model later.

With a recipe and a model definition, I can create a workflow, which will now handle a whole lot of chores for me.

To tune my hyperparameters, I am going to use a Latin Hypercube, which is a method for generating near-random samples of paremeters to try.

To test these hyperparemeters, I am going to make some cross-fold valiations samples that we can use.

And now comes the part that makes my laptop fan turn into a jet engine. The next block uses parallel processing to try the 30 samples from the Latin Hypercube and tests it against my cross fold validation samples. It … takes a while.

But out of it, we get the best combination of hyperparameters to use as inputs into our model. I’m going to use area under the curve as my evaluation metric to determine what is best.

And I can now feed that into my final workflow.

And I can now train a model to use on bracket games.

So how does this model do?

# A tibble: 2 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 accuracy binary 0.719

2 kap binary 0.438Against my training set, not bad. I can predict the correct outcome of a basketball game better than 70 percent of the time.

How about against data the model hasn’t seen yet?

# A tibble: 2 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 accuracy binary 0.705

2 kap binary 0.411Just about the same, which means my model is robust to new data.

I have made a machine learning model that is better at this than I could be.

Mission accomplished.

I think.

I’m not going to bore you with the tedium of applying this to every game in each round. My notebook that does it all is almost 900 lines of code long, and this post is already getting long. But here’s an example of what it looks like using the play-in games.

To do this, I needed to make a tibble of the games, with the team and opponent. The date doesn’t matter, but it’s needed because my model is expecting it.

Then, I need to get the right data for each team and join it to them so each game has the predictors the model is expecting. Then, using the model, we can predict the outcome.

playin <- tibble(

Team="Norfolk State",

Opponent="Appalachian State",

Date = as.Date("2021-03-19")

) %>% add_row(

Team="Wichita State",

Opponent="Drake",

Date = as.Date("2021-03-19")

) %>% add_row(

Team="Mount St. Mary's",

Opponent="Texas Southern",

Date = as.Date("2021-03-19")

) %>% add_row(

Team="Michigan State",

Opponent="UCLA",

Date = as.Date("2021-03-19")

)

playingames <- selectedgames %>%

group_by(Team) %>%

filter(Date == max(Date), Season == "2020-2021") %>%

select(-Date, -Opponent, -Outcome) %>%

right_join(playin)Joining, by = "Team"playingames <- opponentgames %>%

group_by(Opponent) %>%

filter(Date == max(Date)) %>%

ungroup() %>%

select(-Season, -Date, -Outcome) %>%

right_join(playingames, by=c("Opponent")) %>%

select(Team, everything())

playinround <- xg_fit %>%

predict(new_data = playingames) %>%

bind_cols(playingames)

playinround <- xg_fit %>%

predict(new_data = playinround, type="prob") %>%

bind_cols(playinround)

playinround %>% select(Team, .pred_class, Opponent, .pred_L) %>%

gt() %>%

opt_row_striping() %>%

opt_table_lines("none") %>%

tab_style(

style = cell_borders(sides = c("top", "bottom"),

color = "grey", weight = px(1)),

locations = cells_column_labels(everything())

)| Team | .pred_class | Opponent | .pred_L |

|---|---|---|---|

| Norfolk State | L | Appalachian State | 0.5542561 |

| Wichita State | L | Drake | 0.5503606 |

| Mount St. Mary's | W | Texas Southern | 0.2786840 |

| Michigan State | L | UCLA | 0.5978317 |

Since these games have already happened, we know the outcome, and my model got 3 of 4 correct. The only miss was predicting Norfolk State would win, but it also happens to be the game the model has the least amount of confidence in.

This might actually work.

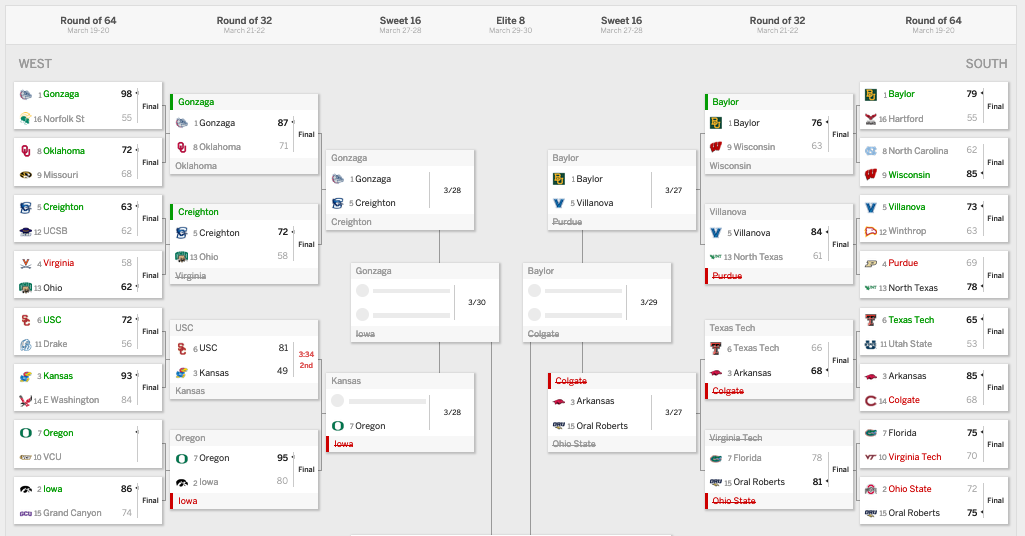

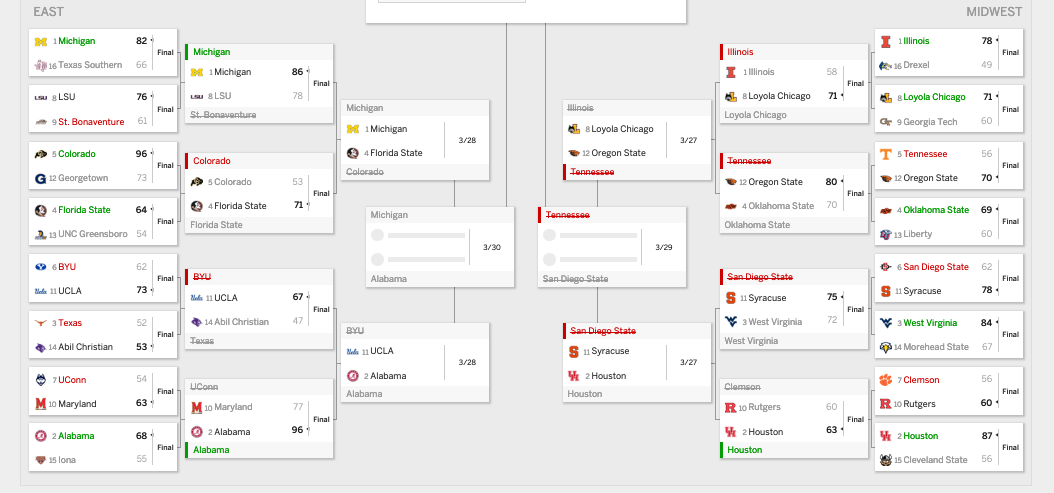

In a word: horrible.

After two rounds, my bracket is better than 38 percent of brackets on ESPN, which puts me in 9.1 millionth place, give or take. I’ve been as low as 10.8 millionth place, so I’ve come up a bit. I still have three of my four Final Four teams and four of eight Elite Eight teams.

When the dust has settled, I’m going to come back and evaluate. Here’s screenshots of my bracket.